Introduction

I have rewritten two websites, with the original owners' permission, that were only available on the Internet Archive's Wayback Machine.

One was the HMS Gambia Association's website which was active from 2003, but disappeared in 2014. It was on the Internet Archive and now has a new site.

The other was the Bristol Gunners website which was active in 2008, and again from 2013 to 2016, Internet Archive and now also has a new site.

This page was written to show some of the things I learned while using the Internet Archive's Wayback Machine.

Finding the Pages

When using the Wayback Machine it is important to remember that the Internet Archive may not have captured every page or file from a website.

The Internet Archive has several Application Programming Interfacea (APIs) available, such as CDX. I found it just as easy to go the Internet Archive or Internet Archive's Wayback Machine home pages and either look for the site or related items from their search box.

If you already know which site you are looking for then you can use:

https://web.archive.org/web/*/[site-url]

For example:

https://web.archive.org/web/*/http://www.hmsgambia.com/

To find all the pages and resources of a site saved by the Internet Archive click on URLs menu item, which will give a paginated list.

Wayback Machine's menu

Alternatively, you can use:

https://web.archive.org/web/*/[site-url]/*

For example:

https://web.archive.org/web/*/http://www.thebristolgunners.webspace.virginmedia.com/*

Alternatively, you can use the CDX API to obtain the list by using:

https://web.archive.org/cdx/search/cdx?url=[site-url]/*

For example:

https://web.archive.org/cdx/search/cdx?url=http://www.thebristolgunners.webspace.virginmedia.com/*

URL Modifiers

The Wayback Machine's URL for a page can be modified by adding a code after the date. Depending on the page the codes will have different effects, but most will simple remove the Wayback Machine overlay, especially on older pages.

id_ will give you the raw page / image / javascript, etc, as originally archived without the overlay.

if_ this is a better option to use for viewing web pages with no overlay. It is meant for framed or iframed content pages.

im_ this is used for images.

cs_ this is used for css stylesheet files.

js_ this is used for javascript.

fw_ this is also meant for framed or iframed content. The overlay is removed.

oe_ this is for embedded content and works similar to if_ and fw_ , so no overlay.

mp_ this is for media content, and \similarly to oe_ / if_ / fw_

Using the CDX API

Format

CDX is a plain text file format developed by the Internet Archive and which summarizes a single web document. It has changed over the years, in 2006, a query to the database would return 9 fields or types of data; in 2015, it would return 11 fields. Today, in 2025, it returns 7. The fields are urlkey, timestamp, original (URL), mimetype, statuscode, digest, and length. The fields are separated by a space.

urlkey - Sort-friendly URI Reordering Transform (SURT) encoded URL. These URLS such as http://hmsgambia.org:80/books.htm turned into strings such as org,hmsgambia)/books.htm which makes them easier to sort.

timestamp - in the form YYYYMMDDhhmmss, such as 20180119005334. The time represents the point at which the web object was captured, measured in GMT.

original - the URL of the captured web object, such as http://hmsgambia.org/books.htm

mimetype - the mimetype of the captured web object, such as text/html, application/pdf, image/gif.

statuscode - the code returned from the server at the time of capture, usually 200.

digest - a unique hash of the web object's content at the time of the crawl. Objects with the same content will have the same hash or digest, for example IXSW6VD4KWXREVQAIIBT7MRKRSWVUTL4.

length - the size of the saved object in bytes.

Usage

You can use the Wayback Machine's CDX API to get all the URLs saved by the Wayback Machine. To use it use:

https://web.archive.org/cdx/search/cdx?url=[site-url]/*

For example:

https://web.archive.org/cdx/search/cdx?url=https://hmsgambia.org/*

This will return a text file which is 10,046 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey and date.

collapse - this will filter out records where the designated field is identical to another. Any of the fields can be collapsed, but probably the most useful is to collapse the digest as identical values means the page has not changed. For example:

https://web.archive.org/cdx/search/cdx?url=https://hmsgambia.org/*&collapse=digest

This will return a text file which is 2,068 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only unique digest values.

Collapsing the urlkey will produce a list of unique objects saved by the Internet Archive, for example:

https://web.archive.org/cdx/search/cdx?url=https://hmsgambia.org/*&collapse=urlkey

This will return a text file which is 1,918 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only unique urlkey values.

Suppose you only want a list of unique HTML files, then this can be done by collapsing the urlkey and filtering on the mimetype field:

This will return a text file which is 152 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only the mimetype of text/html.

Suppose you only want a list of unique files that are NOT HTML files. Then you can use the ! operator in the query:

This will return a text file which is 1,819 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only the mimetype that is NOT text/html.

Of course if you want a list of images saved by the Internet Archive you could use:

This will return a text file which is 1,739 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only the mimetype of image.

You could be even more specific. If you just wanted a list of WEBP images saved you could use:

This will return a text file which is 21 lines long, containing all 7 fields of data for each object saved by the Internet Archive, sorted by urlkey but with only the mimetype of image/webp.

The order and type of fields returned can also be specified by using the &fl property. Suppose you just want a list of unique objects saved by the Internet Archive and their mimetype you could use:

This will return a text file which is 1,932 lines long, containing just the original and mimetype fields of data for each object saved by the Internet Archive, sorted by urlkey.

The timestamp property can be accessed using the keywords from and to. These can be used alone or together. Suppose you wanted the files saved in 2024 and 2025, you could use:

https://web.archive.org/cdx/search/cdx?url=https://hmsgambia.org/*&collapse=digest&from=2024&to=2025

This will return a text file which is 336 lines long, containing all 7 fields of just files saved by the Internet Archive in 2024 and 2025, sorted by urlkey.

The Internet Archive's Wayback CDX Server GitHub page has more details how you can filter or limit the amount fo data that is returned.

The results can be saved to a file, it's only text, and manipulated however you like, in a text editor, using something like PowerShell or as in the following exampe, a spreadsheet.

An Example Using the CDX API and Excel

I wanted to get a list of subdomains used by a university in its early web presence. Using a plain CDX query such as https://web.archive.org/cdx/search/cdx?url=https://university.edu/* was too much for the browser and it stopped working because so many results were being returned.

Reading through the GitHub page and knowing I just wanted the early URLs and their timestamp, I changed the query to:

https://web.archive.org/cdx/search/cdx?url=*.university.edu&collapse=urlkey&to=2005&fl=timestamp,original

I could have added a &output=json parameter, but did not. The query returned a little under half a million lines, but did not choke the browser. I copied and pasted the results into Excel. Then used the Data > Text to Columns function to split it, then sorted the sheet by the URL. I then created a new column and used a formula to extract the subdomains. The formula I used was:

=MID(B2, FIND("//", B2, 1)+2,FIND(".", B2, 7)-8)

I highlighted the column with the formula and used the Home > Fill > Down function to fill the rest of the column. This worked very well but I still had almost half million rows in the spreadsheet.

In a new column , I used the formula =UNIQUE(C:C) to get the unique values from the calculated subdomain column.

This may seem a little complicated and there are probably methods to do it more easily, but this worked and gave me a list of the unique subdomains used to work with.

Downloading the Pages

As the sites I wanted to save the pages from were fairly small, what I did was visit each of them in the Wayback Machine and put if_ in the Wayback Machine url after the date to remove the Wayback Machine overlays. For example:

https://web.archive.org/web/20160315145149if_/http://www.thebristolgunners.webspace.virginmedia.com/

I then saved each page by right clicking on it and choosing "Save as.." > "Webpage, Complete" which saves the page and the resources used by it.

If the sites were larger or I was short of time then I would probably look around for one of the Wayback Machine downloaders.

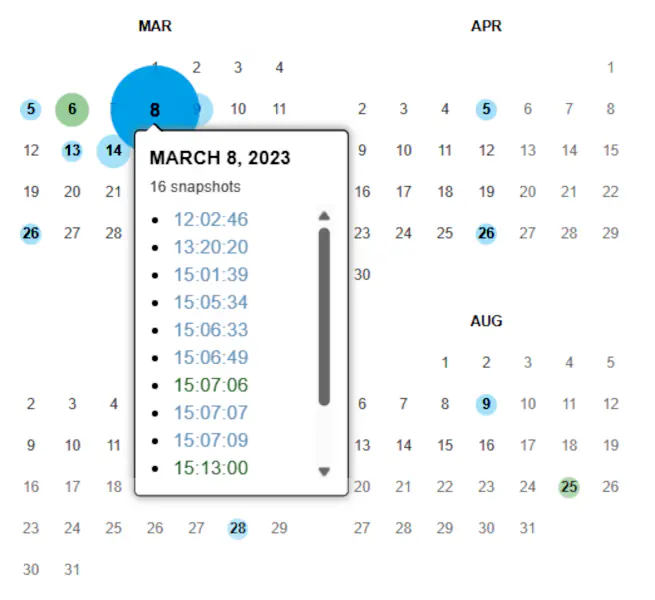

Calendar Colours

The dates that the Wayback Machine saved pages are colour coded, and the size of the dots indicate how many times that day the pages was saved.

Wayback Machine's colour coding

Blue means the web server result code the crawler got for the related capture was a 2xx (good).

Green means the crawlers got a status code 3xx (redirect).

Orange means the crawler got a status code 4xx (client error), and

Red means the crawler got a 5xx (server error).

Browser Extensions

I use the Wayback Machine fairly often and have tried several Chrome browser extensions for it. My favourite is the official Wayback Machine one.

Wayback Machine - the offical extension. It can look for the oldest and newest versions of the saved page and allows you to save particular pages.

Sources and Resources

5 basic techniques for automating investigations using the Wayback Machine - Medium

CDX and DAT Legend - Internet Archive

CDX Internet Archive Index File - Library of Congress

How to Recover your Content from Wayback Machine - InMotion Hosting

Internet Archive

Internet Archive Developers

Internet Archive's Wayback Machine

Is there a way to disable the top bar? - Reddit

The Ultimate Wayback Machine Cheat Sheet for OSINT, Cybersecurity, and Archival Research - LinkedIn

Using the Wayback Machine - Internet Archive's Wayback Machine

Wayback CDX Server - GitHub

Wayback CDX Server API - Internet Archive

Wayback Machine - Wikipedia